Iterate with staging and production Workspaces¶

Easy

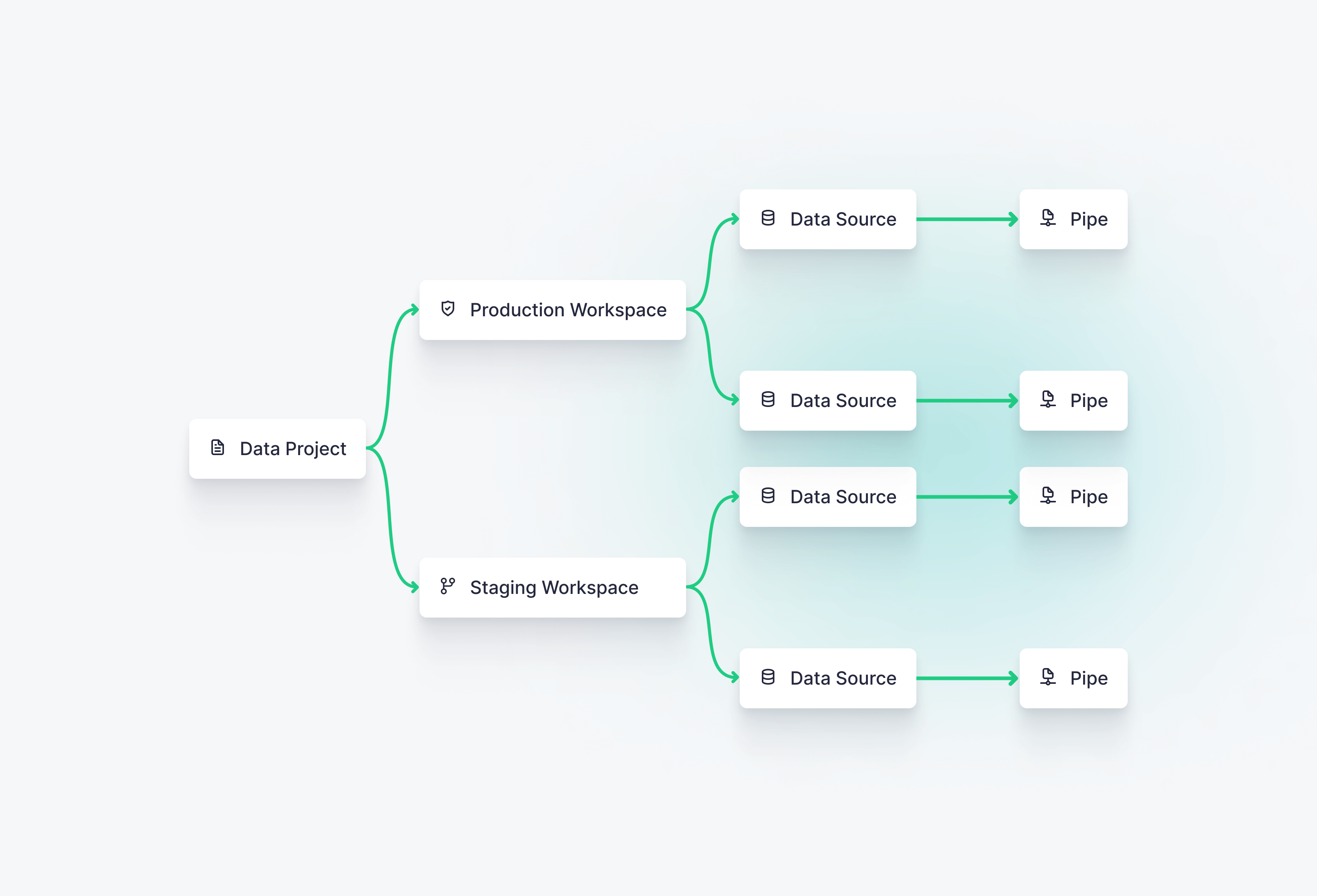

A Data Project can be deployed to different Workspaces using different data (production, staging, etc.).

Organize your Data Projects according to the structure of your teams and projects, and never mix several Data Projects in one Workspace. You can easily share Data Sources from one Data Project to another.

The most common ways to organize Data Projects are:

One Data Project deployed in one Workspace.

One Data Project deployed in multiple Workspaces, for instance, pre-production (or staging) and production Workspaces.

One Data Project contains Data Sources that are shared with other Data Projects, which build use cases on top of them. Each Data Project is deployed to one or more Workspaces.

In this section we are going to explore how to use staging (or pre-production) and production Workspaces and how to integrate them with CI/CD pipelines.

Migrating from prefixes¶

Some time ago there were no Workspaces in Tinybird and the CLI provided a --prefix flag so you could create staging, production or development resources in the same “workspace”.

If you are used to the --prefix flag, we recommend switching to the multiple Workspaces model for the following reasons:

Depending on your needs, you can create multiple isolated Workspaces for testing, staging or production.

You’ll have better security out of the box, limiting access to production Workspaces and/or sensitive data.

This working model follows modern best practices and makes it easier to iterate using our CLI and standard tools like Git.

Convinced? This is how you migrate from prefixes to Workspaces.

Previously, to deploy production and staging resources to the same Workspace, you’d run commands like these from the CLI:

tb push datasources/events.datasource --prefix staging

tb push datasources/events.datasource --prefix pro

That would create two resources in the same Workspace: staging__events and pro__events. Then you’d use different data in each Data Source to simulate your production and staging environments.

How does this work with Workspaces?

Create production and staging Workspaces

Switch among them from the CLI

Push resources to any of them

That way you have fully isolated staging and production environments

tb workspace create staging_acme --user_token <token>

tb workspace create pro_acme --user_token <token>

Once created, you can switch among Workspaces and push resources to them.

When working with multiple Workspaces, you can check which one you are currently authenticated against with tb workspace current or, alternatively, print the current Workspace in your shell prompt.
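As a minimal sketch of the shell prompt idea, the function below prints the name of the Workspace you are authenticated against. It assumes the CLI stores its credentials in a JSON file called .tinyb in the project directory and that the file contains a "name" field; verify this against your own setup before relying on it. The function name tb_current_workspace is hypothetical.

```shell
# Print the Workspace name stored in ./.tinyb, or nothing if the file is absent.
# Assumption: .tinyb is a JSON file containing a field like "name": "staging_acme".
tb_current_workspace() {
  [ -f .tinyb ] || return 0
  # Extract the value of the "name" key; works for single-line and multi-line JSON.
  sed -n 's/.*"name"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' .tinyb | head -n 1
}
```

In Bash you could then embed it in the prompt with something like PS1='[$(tb_current_workspace)] \$ ', so the Workspace name is re-read every time the prompt is drawn.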

To push resources to the staging Workspace:

tb workspace use staging_acme

tb push --push-deps

To push resources to the production Workspace:

tb workspace use pro_acme

tb push --push-deps
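Because the two steps are always run together, it can help to wrap them in a small helper so you never push to whichever Workspace happens to be active. The following is only a sketch: deploy_to and the DRY_RUN variable are hypothetical names, and the only tb commands used are the ones shown above.

```shell
# Switch to the given Workspace and push the Data Project to it.
# Set DRY_RUN=1 to print the commands instead of running them.
deploy_to() {
  workspace="$1"
  if [ -z "$workspace" ]; then
    echo "usage: deploy_to <workspace>" >&2
    return 1
  fi
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "tb workspace use $workspace"
    echo "tb push --push-deps"
    return 0
  fi
  # Only push if switching Workspaces succeeded.
  tb workspace use "$workspace" && tb push --push-deps
}
```

For example, DRY_RUN=1 deploy_to staging_acme prints the two commands without touching any Workspace, while deploy_to pro_acme runs them against production.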

Read on to learn how to integrate both Workspaces in a Continuous Integration and Deployment pipeline.

CI/CD with staging and production Workspaces¶

In this guide we are going to go through this setup:

A staging Workspace with staging data. This Workspace is used to integrate changes in your Data Project before landing in the production Workspace.

A production Workspace with production data. This is the Workspace integrated in your Data Product and used by actual users.

A CI/CD workflow consisting of: running CI over the staging Workspace (optionally over the production one as well), deploying manually to the staging Workspace for integration purposes, and deploying to the production Workspace on merge.
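The routing in that workflow can be sketched as a small function that maps a CI trigger to the Workspace it deploys to. This is an illustration only: target_workspace is a hypothetical helper, the event names follow GitHub's GITHUB_EVENT_NAME and GITHUB_REF conventions, and it assumes your default branch is called main.

```shell
# Map a CI trigger to the Workspace it should deploy to.
# Arguments: $1 = event name, $2 = Git ref.
# Prints the target Workspace name, or nothing when no deployment applies.
target_workspace() {
  case "$1" in
    workflow_dispatch)
      echo "staging_acme"   # manual run: integrate changes in staging
      ;;
    push)
      if [ "$2" = "refs/heads/main" ]; then
        echo "pro_acme"     # merge to main: deploy to production
      fi
      ;;
  esac
}
```

Any other trigger, such as a pull request, prints nothing, which matches the idea that CI runs checks without deploying.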

Guide preparation¶

You can follow along using the ecommerce_data_project.

Download the project by running:

git clone https://github.com/tinybirdco/ecommerce_data_project

cd ecommerce_data_project

Then, create the staging and production Workspaces and authenticate using your user admin token (admin user@domain.com). If you don’t know how to authenticate or use the CLI, check out the CLI Quick Start.

tb workspace create staging_acme --user_token <token>

tb workspace create pro_acme --user_token <token>

Push the Data Project to the production Workspace:

tb workspace use pro_acme

tb push --push-deps --fixtures

** Processing ./datasources/events.datasource

** Processing ./datasources/top_products_view.datasource

** Processing ./datasources/products.datasource

** Processing ./datasources/current_events.datasource

** Processing ./pipes/events_current_date_pipe.pipe

** Processing ./pipes/top_product_per_day.pipe

** Processing ./endpoints/top_products.pipe

** Processing ./endpoints/sales.pipe

** Processing ./endpoints/top_products_params.pipe

** Processing ./endpoints/top_products_agg.pipe

** Building dependencies

** Running products_join_by_id

** 'products_join_by_id' created

** Running current_events

** 'current_events' created

** Running events

** 'events' created

** Running products

** 'products' created

** Running top_products_view

** 'top_products_view' created

** Running products_join_by_id_pipe

** Materialized pipe 'products_join_by_id_pipe' using the Data Source 'products_join_by_id'

** 'products_join_by_id_pipe' created

** Running top_product_per_day

** Materialized pipe 'top_product_per_day' using the Data Source 'top_products_view'

** 'top_product_per_day' created

** Running events_current_date_pipe

** Materialized pipe 'events_current_date_pipe' using the Data Source 'current_events'

** 'events_current_date_pipe' created

** Running sales

** => Test endpoint at https://api.tinybird.co/v0/pipes/sales.json

** 'sales' created

** Running top_products_agg

** => Test endpoint at https://api.tinybird.co/v0/pipes/top_products_agg.json

** 'top_products_agg' created

** Running top_products_params

** => Test endpoint at https://api.tinybird.co/v0/pipes/top_products_params.json

** 'top_products_params' created

** Running top_products

** => Test endpoint at https://api.tinybird.co/v0/pipes/top_products.json

** 'top_products' created

** Pushing fixtures

** Warning: datasources/fixtures/products_join_by_id.ndjson file not found

** Warning: datasources/fixtures/current_events.ndjson file not found

** Checking ./datasources/events.datasource (appending 544.0 b)

** OK

** Checking ./datasources/products.datasource (appending 134.0 b)

** OK

** Warning: datasources/fixtures/top_products_view.ndjson file not found

Finally, push the Data Project to the staging Workspace:

tb workspace use staging_acme

tb push --push-deps --fixtures

Once you have the Data Project deployed to both Workspaces, make sure you connect them to Git and push the CI/CD pipelines to the Git repo.

Configuring CI/CD¶

As you might have learned from the Working with Git guide, we provide CI/CD jobs and pipelines via the tinybirdco/ci Git repository.

With those jobs in mind, you can include your staging Workspace in your CI/CD pipeline in many ways. A common pattern consists of running CD in the staging Workspace before landing changes in the production Workspace.

You can add a new job that is run manually, like this (for GitHub):

on:
  workflow_dispatch:

jobs:
  staging_cd: # deploy changes to staging workspace
    uses: tinybirdco/ci/.github/workflows/cd.yml@v1.1.4
    with:
      tb_deploy: false
      data_project_dir: .
    secrets:
      admin_token: ${{ secrets.ST_ADMIN_TOKEN }} # set (admin user@domain.com) token from the staging workspace in a new secret
      tb_host: https://api.us-east.aws.tinybird.co

This job runs the same deployment pipeline, but using the admin token from the staging Workspace. That way you can test out deployments and data migrations in a non-production Workspace first.

In case of errors in the CD pipeline, or when the staging Workspace is left in an inconsistent state, you can just drop the Workspace and recreate it from the Data Project.
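Dropping and recreating the staging Workspace could be scripted roughly as follows. Treat this as a sketch under assumptions: the create, use and push commands are the ones used earlier in this guide, but tb workspace delete and its --yes flag are assumptions that you should confirm with tb workspace --help. The names recreate_staging, run_or_print, DRY_RUN and TB_USER_TOKEN are hypothetical.

```shell
# Run a command, or just print it when DRY_RUN=1.
run_or_print() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Drop the staging Workspace and rebuild it from the Data Project in the current directory.
# TB_USER_TOKEN must hold your user token when not in dry-run mode.
recreate_staging() {
  ws="${1:-staging_acme}"
  if [ "${DRY_RUN:-0}" = "1" ]; then
    token="<token>"          # never print the real token
  else
    token="$TB_USER_TOKEN"
  fi
  # Assumption: `tb workspace delete` exists and accepts --user_token and --yes.
  run_or_print tb workspace delete "$ws" --user_token "$token" --yes &&
  run_or_print tb workspace create "$ws" --user_token "$token" &&
  run_or_print tb workspace use "$ws" &&
  run_or_print tb push --push-deps --fixtures
}
```

Running DRY_RUN=1 recreate_staging first lets you review the exact commands before anything is deleted. The steps are chained with && so the script stops at the first failure instead of pushing to the wrong Workspace.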

What’s the difference between a staging Workspace and an Environment?¶

At this point you might be asking that question: when do I need a staging Workspace, and when an Environment?

Environments are meant to be ephemeral, either to test out changes in your data flow without affecting your Main Workspace or to automate tasks such as CI/CD pipelines.

The usual workflow of an Environment is:

Create it either manually or through an automated CI/CD pipeline

Deploy some changes

Test them out

Destroy the Environment

Environments will eventually have a decay policy so they are not left behind inadvertently.

On the other hand, Workspaces are meant to be permanent. Your production Workspace receives ingestion and requests to API endpoints from your production Data Product.

Staging Workspaces, in turn, are optional and can serve different purposes:

You don’t want to test with your production data, so you have a separate, well-known subset of data in staging. This is good for anonymization, testing speed or deterministic outputs.

You want to integrate development versions of the Data Project into your Data Product before landing in the production Workspace.

You want to test out some complex deployment or data migration before landing in the production Workspace.

In some cases Environments might be a quick substitute for a staging Workspace. In any case, consider the pros and cons of each one to better organize your pipelines.