Implementing test strategies¶

Intermediate

In the versioning your Pipes guide you learned how to use versioning as part of the usual development workflow of your API Endpoints.

In this guide you’ll learn about different strategies for testing your Data Project.

Guide preparation¶

You can follow along using the ecommerce_data_project.

Download the project by running:

git clone https://github.com/tinybirdco/ecommerce_data_project

cd ecommerce_data_project

Then, create a new Workspace and authenticate using your user admin token (admin user@domain.com). If you don’t know how to authenticate or use the CLI, check out the CLI Quick Start.

tb auth -i

** List of available regions:

[1] us-east (https://ui.us-east.tinybird.co)

[2] eu (https://ui.tinybird.co)

[0] Cancel

Use region [1]: 2

Copy the admin token from https://ui.tinybird.co/tokens and paste it here :

Finally, push the data project to Tinybird:

tb push --push-deps --fixtures

** Processing ./datasources/events.datasource

** Processing ./datasources/top_products_view.datasource

** Processing ./datasources/products.datasource

** Processing ./datasources/current_events.datasource

** Processing ./pipes/events_current_date_pipe.pipe

** Processing ./pipes/top_product_per_day.pipe

** Processing ./endpoints/top_products.pipe

** Processing ./endpoints/sales.pipe

** Processing ./endpoints/top_products_params.pipe

** Processing ./endpoints/top_products_agg.pipe

** Building dependencies

** Running products_join_by_id

** 'products_join_by_id' created

** Running current_events

** 'current_events' created

** Running events

** 'events' created

** Running products

** 'products' created

** Running top_products_view

** 'top_products_view' created

** Running products_join_by_id_pipe

** Materialized pipe 'products_join_by_id_pipe' using the Data Source 'products_join_by_id'

** 'products_join_by_id_pipe' created

** Running top_product_per_day

** Materialized pipe 'top_product_per_day' using the Data Source 'top_products_view'

** 'top_product_per_day' created

** Running events_current_date_pipe

** Materialized pipe 'events_current_date_pipe' using the Data Source 'current_events'

** 'events_current_date_pipe' created

** Running sales

** => Test endpoint at https://api.tinybird.co/v0/pipes/sales.json

** 'sales' created

** Running top_products_agg

** => Test endpoint at https://api.tinybird.co/v0/pipes/top_products_agg.json

** 'top_products_agg' created

** Running top_products_params

** => Test endpoint at https://api.tinybird.co/v0/pipes/top_products_params.json

** 'top_products_params' created

** Running top_products

** => Test endpoint at https://api.tinybird.co/v0/pipes/top_products.json

** 'top_products' created

** Pushing fixtures

** Warning: datasources/fixtures/products_join_by_id.ndjson file not found

** Warning: datasources/fixtures/current_events.ndjson file not found

** Checking ./datasources/events.datasource (appending 544.0 b)

** OK

** Checking ./datasources/products.datasource (appending 134.0 b)

** OK

** Warning: datasources/fixtures/top_products_view.ndjson file not found

Once you have the Data Project deployed to a Workspace make sure you connect it to Git and push the CI/CD pipelines to the repository. The next sections assume tests are being run in a CI pipeline although you can run the very same CLI commands on your local terminal when connected to the remote Workspace.

Regression Tests¶

When one of your API Endpoints is integrated in a production environment (a web or mobile application, a dashboard, etc.), you want to make sure that any change in the Pipe doesn’t change the output of the endpoint.

In other words, you want the same version of an API Endpoint to return the same data for the same requests.

The CLI provides you with automatic regression tests any time you try to push the same version of a Pipe.

Let’s see it with an example. Imagine you have this version of the top_products Pipe:

TOKEN "read_token" READ

NODE endpoint

DESCRIPTION >

returns top 10 products for the last week

SQL >

%

select date, arraySort(x -> x, topKMerge(10)(top_10)) as top_10

from top_products_view

where

date BETWEEN {{ Date(date_start) }}

AND {{ Date(date_end) }}

and action = 'buy'

group by date

And you want to parameterize the date filter to include default values:

TOKEN "read_token" READ

NODE endpoint

DESCRIPTION >

returns top 10 products for the last week

SQL >

%

select date, arraySort(x -> x, topKMerge(10)(top_10)) as top_10

from top_products_view

where

date BETWEEN {{ Date(date_start, '2020-04-23') }}

AND {{ Date(date_end, '2020-04-24') }}

and action = 'buy'

group by date

The params date_start and date_end now have default values. That means by default, the behaviour of the endpoint should be the same.

To illustrate the example, send some requests with different parameter values to the API Endpoint:

curl https://api.tinybird.co/v0/pipes/top_products.json?token={TOKEN}&date_start=2020-04-23&date_end=2020-04-24

curl https://api.tinybird.co/v0/pipes/top_products.json?token={TOKEN}&date_start=2020-04-23&date_end=2020-04-23

curl https://api.tinybird.co/v0/pipes/top_products.json?token={TOKEN}&date_start=2020-04-24&date_end=2020-04-24

Now, you’d create a new Pull Request like this with the change above so the API endpoint is tested for regressions.

On the Continuous Integration pipeline, changes are deployed to an Environment and then there’s a Run pipe regression tests step that runs the following command:

tb env regression-tests coverage --wait

It creates a Job that runs the coverage of the API Endpoints. The Job tests all combinations of parameters by running at least one request for each combination, and comparing the results of the new and old version of the Pipe.

OK - top_products_params(coverage) - ***/v0/pipes/top_products_params.json?start=2021-01-01&end=2023-12-12&pipe_checker=true - 0.093s (-3.0%) 0 bytes (0%)

OK - top_products(coverage) - ***/v0/pipes/top_products.json?date_start=2020-04-24&date_end=2020-04-25&q=SELECT+%0A++date%2C%0A++count%28%29+total%0AFROM+top_products%0AGROUP+BY+date%0AHAVING+total+%3C+0%0A&cli_version=1.0.0b410+%28rev+145e3d7%29&pipe_checker=true - 0.091s (-8.0%) 88 bytes (0.0%)

OK - top_products(coverage) - ***/v0/pipes/top_products.json?date_start=2020-04-24&date_end=2020-04-24&cli_version=1.0.0b410+%28rev+145e3d7%29&pipe_checker=true - 0.098s (15.0%) 12.42 KB (0.0%)

The regression test will also display the Performance metrics of the new vs old Pipe, so we can detect if the new endpoint has any improvement or degradation in performance.

==== Performance metrics ====

--------------------------------------------------------------------

| top_products(coverage) | Origin | Environment | Delta |

--------------------------------------------------------------------

| min response time | 0.086 seconds | 0.091 seconds | +5.45 % |

| max response time | 0.099 seconds | 0.098 seconds | -0.16 % |

| mean response time | 0.092 seconds | 0.094 seconds | +2.45 % |

| median response time | 0.092 seconds | 0.094 seconds | +2.45 % |

| p90 response time | 0.099 seconds | 0.098 seconds | -0.16 % |

| min read bytes | 88 bytes | 88 bytes | +0.0 % |

| max read bytes | 12.42 KB | 12.42 KB | +0.0 % |

| mean read bytes | 6.25 KB | 6.25 KB | +0.0 % |

| median read bytes | 6.25 KB | 6.25 KB | +0.0 % |

| p90 read bytes | 12.42 KB | 12.42 KB | +0.0 % |

--------------------------------------------------------------------

==== Results Summary ====

-------------------------------------------------------------------------------------------------

| Endpoint | Test | Run | Passed | Failed | Mean response time | Mean read bytes |

-------------------------------------------------------------------------------------------------

| sales | coverage | 0 | 0 | 0 | +0.0 % | +0.0 % |

| top_products_agg | coverage | 0 | 0 | 0 | +0.0 % | +0.0 % |

| top_products_params | coverage | 1 | 1 | 0 | -3.34 % | +0.0 % |

| top_products | coverage | 2 | 2 | 0 | +2.45 % | +0.0 % |

-------------------------------------------------------------------------------------------------

In this case, we can see there’s no regression since the API endpoint behaves the same.

As a test, let’s introduce a breaking change in the Pipe definition by changing a filter:

TOKEN "read_token" READ

NODE endpoint

DESCRIPTION >

returns top 10 products for the last week

SQL >

%

select date, arraySort(x -> x, topKMerge(10)(top_10)) as top_10

from top_products_view

where

date BETWEEN {{ Date(date_start, '2020-04-23') }}

AND {{ Date(date_end, '2020-04-24') }}

and action = 'error'

group by date

Now, create a new Pull Request with the change above so the API endpoint is tested for regressions.

Same procedure as above but with this output:

FAIL - top_products(coverage) - ***/v0/pipes/top_products.json?date_start=2020-04-24&date_end=2020-04-24&cli_version=1.0.0b410+%28rev+145e3d7%29&pipe_checker=true - 0.03s (7.0%) 78 bytes (-99.0%)

==== Failures Detail ====

❌ top_products(coverage) - ***/v0/pipes/top_products.json?date_start=2020-04-24&date_end=2020-04-24&cli_version=1.0.0b410+%28rev+145e3d7%29&pipe_checker=true

** 1 != 0 : Unexpected number of result rows count, this might indicate regression.

💡 Hint: Use `--no-assert-result-rows-count` if it's expected and want to skip the assert.

There’s one of the requests failing, that means the result for the version of the API endpoint in the Main Workspace is different than the version we are deploying in the Pull Request.

In this case, the error is about a different output, since we changed the filter it makes sense.

Performance metrics also indicate that the output of both API endpoints, the one in Main and the one being deployed, don’t match. You can see it in the read bytes metrics.

==== Performance metrics ====

---------------------------------------------------------------------

| top_products(coverage) | Origin | Environment | Delta |

---------------------------------------------------------------------

| min response time | 0.028 seconds | 0.027 seconds | -1.09 % |

| max response time | 0.032 seconds | 0.03 seconds | -7.57 % |

| mean response time | 0.03 seconds | 0.029 seconds | -4.57 % |

| median response time | 0.03 seconds | 0.029 seconds | -4.57 % |

| p90 response time | 0.032 seconds | 0.03 seconds | -7.57 % |

| min read bytes | 88 bytes | 78 bytes | -11.36 % |

| max read bytes | 12.42 KB | 78 bytes | -99.37 % |

| mean read bytes | 6.25 KB | 78 bytes | -98.75 % |

| median read bytes | 6.25 KB | 78.0 bytes | -98.75 % |

| p90 read bytes | 12.42 KB | 78 bytes | -99.37 % |

---------------------------------------------------------------------

==== Results Summary ====

-------------------------------------------------------------------------------------------------

| Endpoint | Test | Run | Passed | Failed | Mean response time | Mean read bytes |

-------------------------------------------------------------------------------------------------

| sales | coverage | 0 | 0 | 0 | +0.0 % | +0.0 % |

| top_products_agg | coverage | 0 | 0 | 0 | +0.0 % | +0.0 % |

| top_products_params | coverage | 1 | 1 | 0 | -0.4 % | +0.0 % |

| top_products | coverage | 2 | 1 | 1 | -4.57 % | -98.75 % |

-------------------------------------------------------------------------------------------------

Since the API endpoint filter changed, the response changed for the requests, so the regression testing warns you about it preventing a possible regression.

Now, you have one of two options:

Create a new version of the API endpoint as described in the versioning your Pipes guide.

If you are sure the new response is correct, and don’t consider this change a regression, you can skip regression testing to override the Pipe as described in this guide.

How regression tests work¶

When we run tb env regression-tests coverage --wait to check the changes of Pipe against the existing one, the CLI creates a job to run regression tests to validate you are not unintentionally breaking backward compatibility.

The regression test funcionality is powered by tinybird.pipe_stats_rt, one of the service Data Sources that are available to you out of the box. You can find more information about these service Data Sources here.

In this case, a query is run against tinybird.pipe_stats_rt to try to gather all the combination of parameters you are using in an API Endpoint. This way we have coverage that all the possible combinations have been validated at least once.

SELECT

## Using this function we extract all the parameters used in each requests

extractURLParameterNames(assumeNotNull(url)) as params,

## According to the option `--sample-by-params`, we run one query for each combination of parameters or more

groupArraySample({sample_by_params if sample_by_params > 0 else 1})(url) as endpoint_url

FROM tinybird.pipe_stats_rt

WHERE

pipe_name = '{pipe_name}'

## According to the option `--match`, we will filter only the requests that contain that parameter

## This is specially useful when you want to validate a new parameter you want to introduce or you have optimize the endpoint in that specific case

{ " AND " + " AND ".join([f"has(params, '{match}')" for match in matches]) if matches and len(matches) > 0 else ''}

GROUP BY params

FORMAT JSON

If you have an endpoint with millions of requests per day, we can fallback to a list:

WITH

## Using this function we extract all the parameters used in each requests

extractURLParameterNames(assumeNotNull(url)) as params

SELECT url

FROM tinybird.pipe_stats_rt

WHERE

pipe_name = '{pipe_name}'

## According to the option `--match`, we will filter only the requests that contain that parameter

## This is specially useful when you want to validate a new parameter you want to introduce or you have optimize the endpoint in that specific case

{ " AND " + " AND ".join([f"has(params, '{match}')" for match in matches]) if matches and len(matches) > 0 else ''}

## According to the option `--limit` by default 100

LIMIT {limit}

FORMAT JSON

Data Quality Tests¶

Data quality tests are meant to cover scenarios that don’t have to happen in your production data. For example, you can check that the data is not empty, or that the data is not duplicated.

Data quality tests are run with the tb test command. You should include as many YAML files in the tests directory of your Data Project.

You can get use of them following two strategies:

Run them periodically over your Main Workspace, that is, production data.

Use them as part of your Test Suite in Continuous Integration with a Data Branch or fixtures.

For instance, given the ecommerce_data_project let’s say we want to validate that:

There are no negative sales.

There are products sold every day.

We’ll create a tests/default.yaml as in this example

- no_negative_sales:

max_bytes_read: null

max_time: null

sql: |

SELECT

date,

sumMerge(total_sales) total_sales

FROM top_products_view

GROUP BY date

HAVING total_sales < 0

You can also perform validations over pipe endpoints.

Following ecommerce_data_project example, we want to validate that top_products_params endpoint:

Does not return empty

top_10products on 2023

We need to add to tests/default.yaml.

- products_by_date:

max_bytes_read: null

max_time: null

sql: |

SELECT

date,

count() total

FROM top_products

GROUP BY date

HAVING total < 0

pipe:

name: top_products

params:

date_start: '2020-04-24'

date_end: '2020-04-25'

Then they are run in Continuous Integration with tb test run -v

tb test run -v

----------------------------------------------------------------------

| file | test | status | elapsed |

----------------------------------------------------------------------

| ./tests/default.yaml | no_negative_sales | Pass | 0.001300466 ms |

| ./tests/default.yaml | products_by_date | Pass | 0.000197256 ms |

----------------------------------------------------------------------

Fixture Tests¶

Regression tests confirm backwards compatibility of your API endpoints when overwriting them and data quality tests cover scenarios that might not happen with test or production data. There are times when you have to cover a very specific scenario or a use case that is being developed and you don’t have production data for it. In those cases you should use fixture tests.

To configure fixture testing you need:

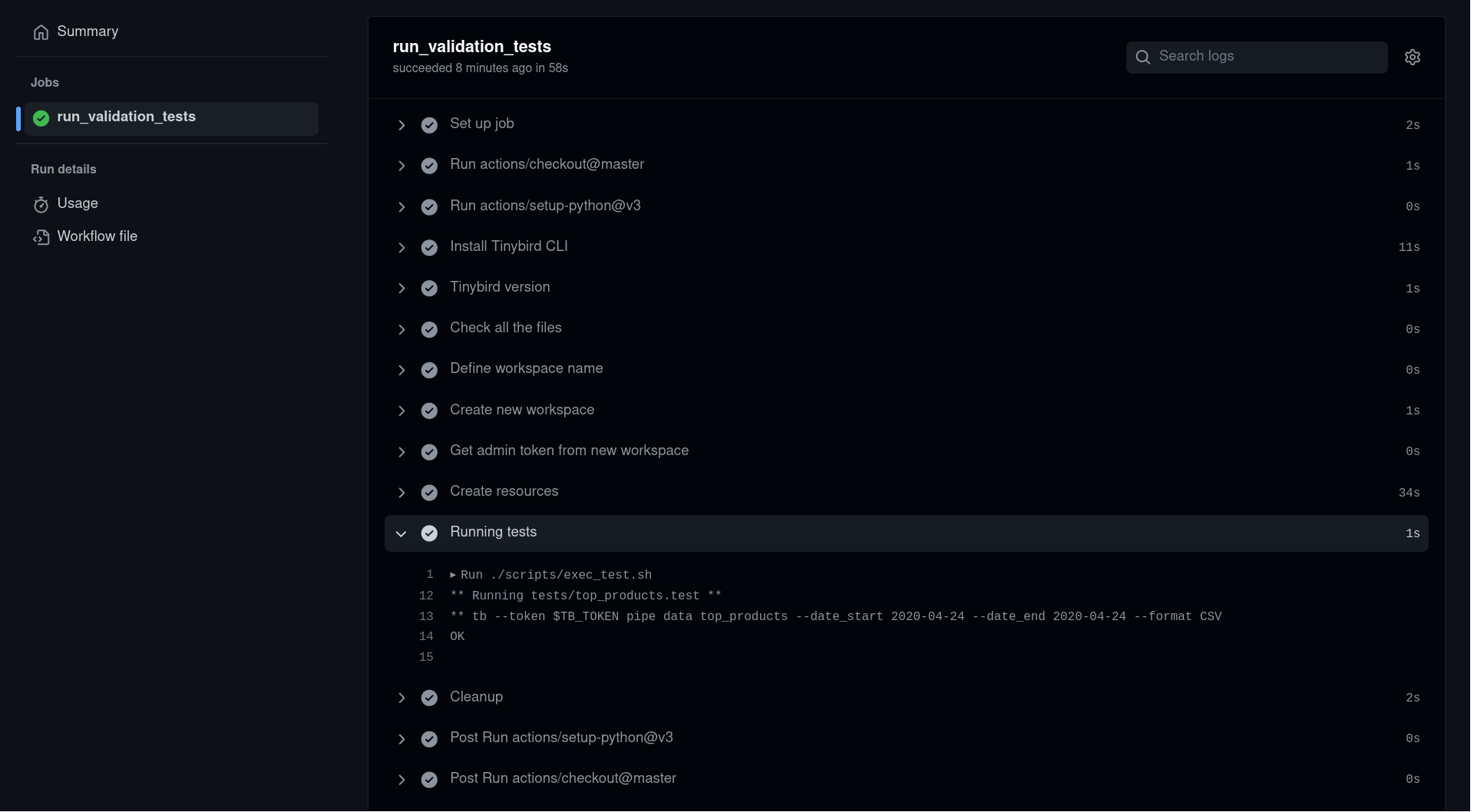

A script to run fixture tests, like this. The script is automatically created when you connect your Workspace to Git

Data fixtures: These are data files placed in the

datasources/fixturesfolder of the Data Project. Their name has to match a name of a Data Source, see an example file.Fixture tests in the

testsfolder.

In the Continuous Integration job, after an Environment is created, the changed files in the Pull Request are deployed with this command tb deploy --fixtures --populate --wait. The --fixtures flag automatically append all files in datasources/fixtures only if they match the name of a Data Source.

To effectively use data fixtures you should:

Use data that do not collide with production data, to avoid unexpected results in regression testing.

Use only data fixtures for landing Data Sources since Materialized Views are automatically populated.

Once you have set up your data fixtures, it’s time to write fixture tests. Fixture tests are placed inside the tests folder of the Data Project, you can have subfolders to better organize the tests by module or API Endpoint.

Each fixture test needs two files:

One to indicate a request to an API Endpoint with this naming convention

<test_name>.testOne to indicate the exact response to the API Endpoint with this naming convention

<test_name>.test.result

For instance, to test the output of the top_products endpoint, create a top_products.test fixture test with this content:

tb pipe data top_products --date_start 2020-04-24 --date_end 2020-04-24 --format CSV

The test makes a request to the top_products API endpoint passing the date_start and date_end parameters and the response is CSV

Now we need a top_products.test.result with the expected result given our data fixtures:

"date","top_10"

"2020-04-24","['sku_0001','sku_0002','sku_0003','sku_0004']"

With this approach, you can have your tests for your Data Project integrated into your development process. Fixture tests are run as part of the Continuous Integration pipeline and the Job fails if the tests fail.